In the world of digital products, user experience is everything, and understanding that experience starts with tracking the right data. At OLX India, where millions of users interact with our apps every day, traditional event tracking systems couldn’t keep up. They were limited in scale and flexibility and increasingly expensive, so we needed something better: a system built for our specific challenges. Enter EventHouse, our in-house, end-to-end event tracking platform that captures every click, swipe, and scroll, helping us uncover powerful insights and build seamless user journeys. In this blog, we’ll walk you through how EventHouse revolutionised our approach to data, analytics, and decision-making, one event at a time.

Ever wondered how apps evolve to create effortless user experiences? Let’s dive in.

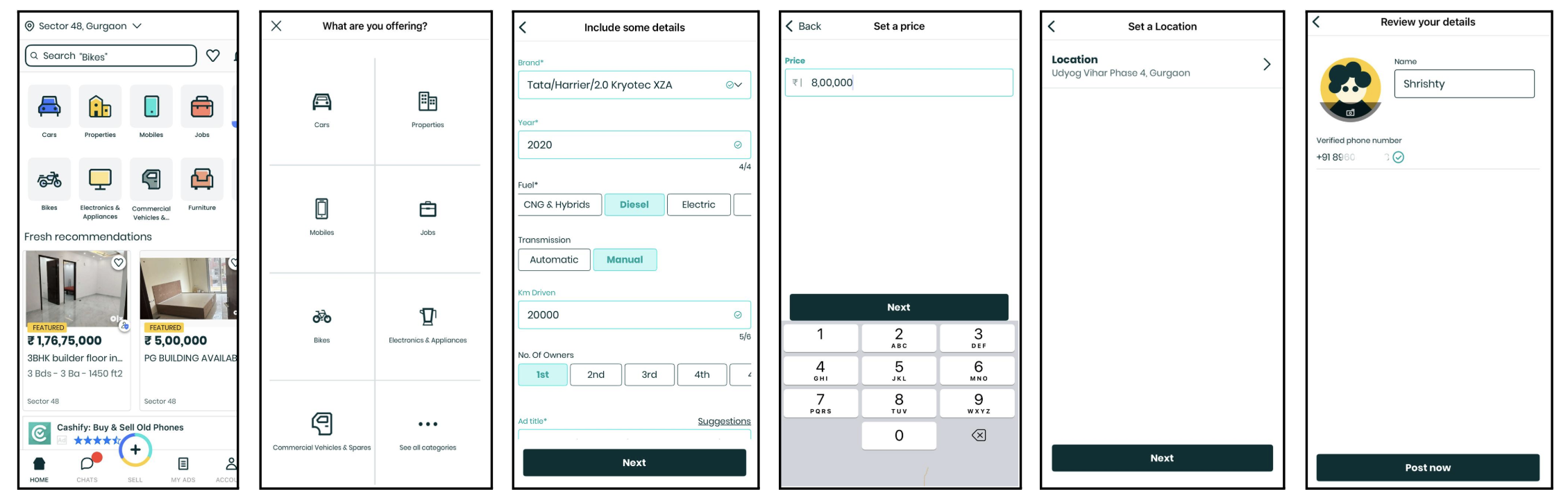

Imagine this: You’re a seller trying to list your product. You take photos, pick a category, choose a location, and finalise your post.

Sounds simple, right? But what if something along the way frustrates you? You hesitate and drop off without ever listing your item. Now suppose our system tracks only the first and last steps. We know some users abandon the process, but we have no idea where or why. Are they struggling with the image upload? Is category selection confusing? Without the right data, we’re left guessing instead of improving. But what if we collect data at every step? Suddenly, patterns emerge. We can pinpoint the exact friction points, smooth them out, and watch conversion rates rise.

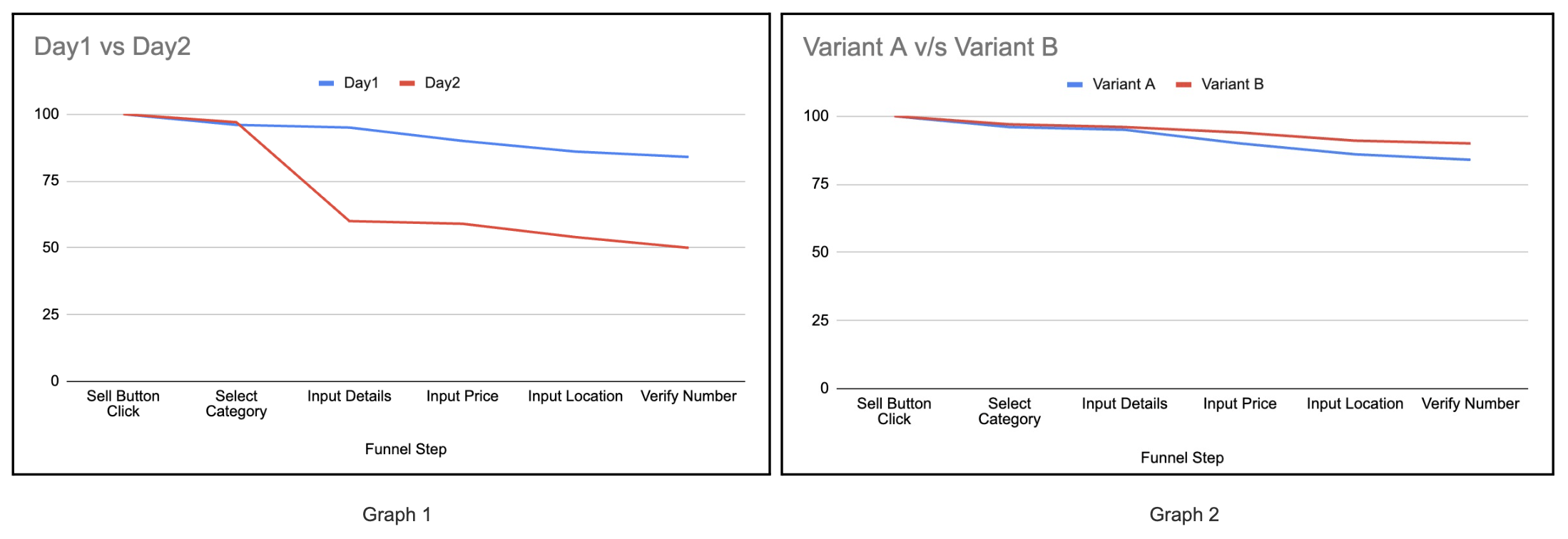

These graphs show user drop-offs at each step of the ad-posting process. By analysing these trends, we can identify friction points and improve the seller experience to drive higher conversions.

1. Day-to-Day Seller Drop-Off Trends: A sharp decline on Day 2 highlights potential issues affecting ad completion rates.

2. Variant A vs. Variant B (A/B Testing Insights): A comparison of two versions of the seller flow to determine which user journey performs better.
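To make the idea of step-level drop-off concrete, here is a minimal Python sketch of how it could be computed once every step of the posting flow is tracked. The event names (`photos_added`, `category_selected`, `location_selected`, `ad_posted`) and the `(user_id, event_name)` input shape are hypothetical, chosen only to mirror the seller journey described above; this is not EventHouse's actual reporting code.

```python
from collections import defaultdict

# Hypothetical event names for the ad-posting steps described above.
FUNNEL_STEPS = ["photos_added", "category_selected", "location_selected", "ad_posted"]


def funnel_dropoff(events):
    """Return (step, users_reached, drop_off_rate) for each step of the funnel.

    `events` is an iterable of (user_id, event_name) pairs. A user counts as
    reaching a step only if they also reached every earlier step.
    """
    steps_by_user = defaultdict(set)
    for user_id, event_name in events:
        steps_by_user[user_id].add(event_name)

    report = []
    previous = None
    for i, step in enumerate(FUNNEL_STEPS):
        required = set(FUNNEL_STEPS[: i + 1])
        reached = sum(1 for done in steps_by_user.values() if required <= done)
        # Share of users at the previous step who did not make it to this one.
        rate = 1 - reached / previous if previous else 0.0
        report.append((step, reached, round(rate, 3)))
        previous = reached
    return report


sample = [
    ("u1", "photos_added"), ("u1", "category_selected"),
    ("u1", "location_selected"), ("u1", "ad_posted"),
    ("u2", "photos_added"), ("u2", "category_selected"),
    ("u3", "photos_added"),
]
for row in funnel_dropoff(sample):
    print(row)
```

In this toy sample, the largest relative drop (50%) sits between category and location selection, exactly the kind of signal a setup that only tracks the first and last steps cannot reveal.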

Before going further, let’s define events and event tracking so the rest of this discussion is easier to follow.

An event refers to any user action or system-generated activity that occurs within a digital application, website, or platform. Events capture interactions like clicks, scrolls, form submissions, video plays, purchases, or even backend processes such as API calls or data updates.

Event tracking is the process of capturing, storing, and analysing these events to understand user behaviour, optimise experiences, and improve business outcomes. This is done using analytics tools, tracking pixels, or event-driven architectures.

Why Event Tracking Matters

Every action a user takes on a digital platform (clicking a button, filling out a form, or abandoning a cart) is an event. Event tracking helps businesses understand user behaviour, optimise funnels, and make data-driven decisions. But what happens when your event tracking system has limitations? You miss critical insights, and optimisation becomes guesswork rather than a strategic approach.

The Challenge with Existing Event Tracking Systems

Many businesses rely on third-party event-tracking tools to monitor user interactions. These platforms offer pre-built solutions but often come with restrictions on the number of events, data storage constraints, and high costs.

At OLX India, we serve more than 3M daily active users (DAUs) and 30M monthly active users (MAUs). Our existing event tracking system allowed us to track only a limited number of events per day, far too few to capture the complete user journey. As a result, critical user interactions went untracked, making it difficult to optimise funnels, reduce drop-offs, and make informed business decisions.

This is where EventHouse comes in.

Rather than relying on costly third-party tools, our engineering team built EventHouse, a scalable, efficient, and cost-effective event tracking platform tailored to our needs.

By optimising every stage, from event collection to processing and storage, EventHouse now provides limitless event tracking, empowering product and marketing teams to refine user experiences confidently.

How is EventHouse Improving Our System?

- Enhanced User Journey Analysis: By collecting comprehensive event data, we can analyse user funnels more deeply, identifying pain points and areas for improvement. Unlike Google Analytics (GA) and Clevertap, which impose limitations on using event parameters, EventHouse allows us to track and analyse events with rich contextual data, providing a clearer picture of user behaviour.

- Deeper Data-Driven Decision Making: One of GA’s key drawbacks is the inability to use raw event data for analysis, limiting deeper insights. With EventHouse, we can directly access raw event data, enabling us to join it with transactional data in our database to uncover meaningful trends and patterns. This unlocks advanced analytics capabilities that were previously restricted.
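As an illustration of what direct access to raw events enables, the sketch below joins a table of raw events with a table of transactions using Python's built-in `sqlite3`. The schema (`events`, `payments`, `user_id`, `event_name`, `amount`, `ts`) and the `package_page_viewed` event are hypothetical; in practice a join like this would run in the data warehouse rather than in SQLite.

```python
import sqlite3

# In-memory stand-ins for raw event data and transactional data (hypothetical schema).
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE events (user_id TEXT, event_name TEXT, ts TEXT);
    CREATE TABLE payments (user_id TEXT, amount REAL, ts TEXT);
    INSERT INTO events VALUES
        ('u1', 'package_page_viewed', '2024-05-01T10:00:00'),
        ('u2', 'package_page_viewed', '2024-05-01T10:05:00'),
        ('u3', 'package_page_viewed', '2024-05-01T11:00:00');
    INSERT INTO payments VALUES
        ('u1', 499.0, '2024-05-01T10:02:00'),
        ('u3', 999.0, '2024-05-02T09:00:00');
    """
)

# Of the users who viewed the package page, how many paid afterwards, and for how much?
# ISO-8601 timestamps compare correctly as strings.
query = """
SELECT COUNT(DISTINCT e.user_id)  AS viewers,
       COUNT(DISTINCT p.user_id)  AS payers,
       COALESCE(SUM(p.amount), 0) AS revenue
FROM events AS e
LEFT JOIN payments AS p
  ON p.user_id = e.user_id AND p.ts >= e.ts
WHERE e.event_name = 'package_page_viewed'
"""
viewers, payers, revenue = conn.execute(query).fetchone()
print(f"viewers={viewers} payers={payers} revenue={revenue}")
```

With one view event per user, the revenue sum is exact; if users can view the page repeatedly, payments should be aggregated per user first to avoid double counting.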

- Improved Revenue and Conversions: Understanding user behaviour at a granular level allows us to refine strategies that drive revenue growth, improve user engagement, and boost paid user conversions. Because EventHouse provides unsampled data, our insights are accurate, something not always possible with external analytics platforms.

- Automated and Custom Reports: EventHouse allows us to build automated reports, eliminating manual effort and making analytics more efficient. With real-time tracking and dashboarding capabilities, teams can instantly access the insights they need, without the delays and constraints of third-party tools.

- Scalability and Cost Efficiency: Unlike Clevertap, which becomes increasingly expensive as event tracking scales, EventHouse allows us to track unlimited events at a fraction of the cost. This ensures we can expand our analytics capabilities without financial constraints, making it a more sustainable and budget-friendly solution.

- The Next Holy Grail of Analytics: EventHouse is not just another analytics tool—it has the potential to become our single source of truth for accurate, unsampled event data. By overcoming the limitations of GA and Clevertap, we now have a powerful system that ensures scalability, flexibility, and deeper insights, giving us a competitive edge.

With EventHouse in place, we now have a powerful tool to collect and use data to improve our product, enhance user satisfaction, and drive business growth.

How Are We Collecting Data In-House?

EventHouse SDK is a cross-platform tool (Android, iOS, Web) for efficient and scalable event tracking. It standardises event collection, enabling data-driven decisions. Configuration is fetched from an API with ETag support (so an unchanged configuration is not downloaded again), cached in memory, and persisted locally. Events are batched and sent asynchronously with thread-safe processing. Each session is identified by a UUID, and a new session starts after 30 seconds of inactivity. The SDK is designed for minimal overhead and seamless integration.
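The session and batching rules can be sketched in a few lines. This is a hedged Python illustration, not the EventHouse SDK itself (which targets Android, iOS, and Web): the `EventTracker` class, the batch size of 50, and the injected `send` callback are assumptions, and the ETag configuration fetch is not shown. Only the UUID-based sessions, the 30-second inactivity window, and thread-safe asynchronous batching come from the description.

```python
import threading
import time
import uuid
from concurrent.futures import ThreadPoolExecutor

SESSION_TIMEOUT_SECONDS = 30  # from the text: a new session starts after 30 s idle


class EventTracker:
    """Hypothetical tracker: UUID sessions plus thread-safe batching."""

    def __init__(self, send, batch_size=50, clock=time.monotonic):
        self._send = send              # ships one batch, e.g. over HTTP (assumed)
        self._batch_size = batch_size  # assumed default; the real value is not stated
        self._clock = clock
        self._lock = threading.Lock()
        self._buffer = []
        self._session_id = None
        self._last_activity = None
        # One background worker sends batches off the calling thread.
        self._executor = ThreadPoolExecutor(max_workers=1)

    def _session_for(self, now):
        # Start a new session on first use or after too long without activity.
        expired = (self._last_activity is None
                   or now - self._last_activity > SESSION_TIMEOUT_SECONDS)
        if expired:
            self._session_id = str(uuid.uuid4())
        self._last_activity = now
        return self._session_id

    def track(self, name, **params):
        with self._lock:
            session_id = self._session_for(self._clock())
            self._buffer.append({"name": name, "session_id": session_id, **params})
            if len(self._buffer) < self._batch_size:
                return
            batch, self._buffer = self._buffer, []
        self._executor.submit(self._send, batch)

    def close(self):
        """Flush any remaining events and wait for pending sends to finish."""
        with self._lock:
            batch, self._buffer = self._buffer, []
        if batch:
            self._executor.submit(self._send, batch)
        self._executor.shutdown(wait=True)


tracker = EventTracker(send=lambda batch: print(len(batch), "events sent"), batch_size=2)
tracker.track("photos_added", step=1)
tracker.track("category_selected", step=2)
tracker.close()
```

Sending happens outside the lock, so a slow network call never blocks other threads that are recording events.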

Collecting Events from Apps

We have developed an innovative event collection and ingestion system that is highly cost-efficient to run and that collects, processes, and stores event data in Amazon S3 for downstream Data Engineering (DE) pipelines.

1. Client SDK: Smart Event Collection and Compression

The first step in our pipeline is the event collection process. We’ve developed a dynamic Client SDK that can be configured to collect a flexible number of events from client applications. What sets this SDK apart is its ability to send bulk events in a compressed format. By using compression, we’ve significantly reduced the amount of ingress traffic and saved 90% in costs related to Application Load Balancer (ALB) usage. This approach ensures that we can handle massive event streams without overwhelming the network or incurring steep costs.
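A minimal sketch of a compressed bulk payload, assuming the SDK serialises a batch as JSON Lines and gzips it into a single request body. The post does not describe the exact wire format, so the field names and the use of JSON Lines on the wire are assumptions. Because event JSON is highly repetitive (the same keys and many of the same values), gzip shrinks such batches substantially.

```python
import gzip
import json


def encode_batch(events):
    """Serialise a batch as JSON Lines and gzip it into one request body."""
    lines = "\n".join(json.dumps(e, separators=(",", ":")) for e in events)
    return gzip.compress(lines.encode("utf-8"))


def decode_batch(body):
    """Inverse of encode_batch, as a collector would do on receipt."""
    text = gzip.decompress(body).decode("utf-8")
    return [json.loads(line) for line in text.splitlines() if line]


# Hypothetical events; real payloads would carry richer parameters.
events = [
    {"name": "screen_view", "screen": "post_ad_category", "session_id": "s-1", "seq": i}
    for i in range(500)
]
raw_size = len("\n".join(json.dumps(e, separators=(",", ":")) for e in events).encode("utf-8"))
body = encode_batch(events)
# Over HTTP, such a body would typically be sent with a "Content-Encoding: gzip" header.
print(f"{raw_size} bytes raw -> {len(body)} bytes gzipped")
```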

2. Application Load Balancer (ALB): Efficient Traffic Management and Secure Routing

Once events are sent from the client, they pass through the Application Load Balancer (ALB). The ALB plays a critical role in managing the incoming traffic. It balances the load by distributing requests across backend services, ensuring optimal performance and preventing any one server from becoming a bottleneck. Additionally, the ALB acts as an API Gateway, securely routing requests to appropriate backend services. Without the ALB, direct transmission of logs to backend servers could lead to performance bottlenecks, making the infrastructure inefficient.

3. Nginx Server: Event Processor & Aggregator

After the ALB, the events are forwarded to Nginx, which acts as a lightweight reverse proxy and log collector. Nginx is deployed as Kubernetes pods for high availability and scalability. It runs scripts to collect event logs and write them to event files, which are rotated based on size to keep log management efficient. Used this way, Nginx handles incoming requests quickly and logs them for later analysis without overloading the system.
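Size-based rotation is the same idea implemented by Python's standard `logging.handlers.RotatingFileHandler`. The sketch below uses it purely to illustrate the behaviour; EventHouse does this inside Nginx, and the file name, the 1 KB limit, and the backup count here are arbitrary choices for the example.

```python
import logging
import os
import tempfile
from logging.handlers import RotatingFileHandler

log_dir = tempfile.mkdtemp()
path = os.path.join(log_dir, "events.log")

# Roll the file over before it would exceed ~1 KB, keeping up to 5 rotated files
# (events.log.1 ... events.log.5) for a downstream shipper to pick up.
handler = RotatingFileHandler(path, maxBytes=1024, backupCount=5)
handler.setFormatter(logging.Formatter("%(message)s"))

logger = logging.getLogger("eventhouse.rotation_sketch")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)

# Write one JSON event per line, as an event collector would.
for i in range(100):
    logger.info('{"name":"screen_view","seq":%d}', i)
handler.close()

print(sorted(os.listdir(log_dir)))
```

Capping file size keeps each file small enough to ship and compress quickly, and a shipper only ever needs to pick up closed, rotated files.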

Cost Efficiency & Scalability of Nginx Servers: A single Nginx server can handle up to 1M requests per minute (RPM). With our added event-processing logic, each server handles more than 300K RPM with a single CPU and 1 GB of memory, which makes this design highly cost-efficient.
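The per-server figure translates into simple capacity arithmetic: 300K requests per minute is 5,000 requests per second for a single-CPU pod. The sketch below estimates how many such pods a given peak would need; the 1M RPM peak and the 30% headroom are illustrative assumptions, not OLX India figures.

```python
import math

PER_POD_RPM = 300_000  # single CPU, 1 GB memory, with event-processing logic (from the text)


def pods_needed(peak_rpm, headroom=0.3):
    """Pods required to serve `peak_rpm` while keeping `headroom` capacity spare."""
    usable_rpm = PER_POD_RPM * (1 - headroom)
    return math.ceil(peak_rpm / usable_rpm)


print(PER_POD_RPM / 60)        # 5000.0 requests per second per pod
print(pods_needed(1_000_000))  # 5 pods for a hypothetical 1M RPM peak
```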

4. FluentBit: Event Distributor

Next in the pipeline is FluentBit, a powerful and lightweight data processor and distributor. FluentBit picks up event data files from the Nginx servers, compresses them, and uploads them to Amazon S3 at regular intervals using a defined file path format. By aggregating events and processing them before storage, we make data storage more efficient and improve ingestion performance further down the pipeline.

5. AWS S3: Scalable and Reliable Event Storage

For storing event data, we use AWS S3, a highly scalable and reliable object storage service. Events are stored in compressed JSON Lines format (.json.gz), which balances human readability and storage efficiency. While formats like Parquet offer better query performance and compression, JSON Lines remains our choice for easy debugging and log inspection. Compression reduces storage costs while keeping the data accessible and efficient to process.

- Compression and Storage Efficiency: Each event file is compressed using the GZIP (.gz) format, which reduces storage space while keeping the data easily accessible. This not only minimises storage costs but also speeds up data transfer when accessing logs or moving them through different stages of the pipeline.

- Data Partitioning for Future Growth: We partition the stored event data by date and hour (Year/Month/Day/Hour). This partitioning strategy brings several benefits:

  - Simplified Data Management: It becomes easier to retire historical data or manage storage tiers over time.

  - Enhanced Query Performance: If we adopt services like Amazon Athena for querying, this partitioning structure will already be optimised for high performance.

  - Faster Debugging: Debugging intermittent issues becomes simpler, as we can quickly reload or inspect data from specific periods.
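A sketch of how the date-and-hour layout could map to S3 object keys, and how it turns retiring old data into selecting whole prefixes. The `events/` root, the `YYYY/MM/DD/HH` key shape, the batch file naming, and the 90-day retention are hypothetical choices for illustration.

```python
from datetime import datetime, timedelta, timezone


def partition_prefix(ts, root="events"):
    """Hour-level prefix such as events/2024/05/17/09/ (expects an aware datetime)."""
    ts = ts.astimezone(timezone.utc)
    return f"{root}/{ts:%Y/%m/%d/%H}/"


def object_key(ts, batch_id, root="events"):
    """Key for one compressed JSON Lines file inside its hour partition."""
    return f"{partition_prefix(ts, root)}{batch_id}.json.gz"


def is_expired(prefix, now, retention_days=90):
    """True if an hour partition (e.g. events/2024/01/31/23/) is past retention."""
    year, month, day, hour = (int(part) for part in prefix.strip("/").split("/")[-4:])
    partition_start = datetime(year, month, day, hour, tzinfo=timezone.utc)
    return partition_start < now - timedelta(days=retention_days)


ts = datetime(2024, 5, 17, 9, 30, tzinfo=timezone.utc)
print(object_key(ts, "batch-0001"))  # events/2024/05/17/09/batch-0001.json.gz
```

Because every hour is its own prefix, reloading a specific period or deleting expired data means listing a handful of prefixes rather than scanning individual files.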

Why Does This Architecture Make Sense?

This architecture is designed to be both scalable and highly cost-efficient. Each component is optimised to handle increasing volumes of data while minimising operational costs. By compressing event data early in the pipeline, using load balancing for traffic management, and structuring data storage intelligently, we’ve built a system that can grow with our needs without breaking the bank.

- Compression at Every Step: From the client SDK to storage, data is compressed, reducing storage costs and speeding up data transfer.

- Scalable Infrastructure: With Kubernetes, Nginx, and AWS services, the system can easily scale to accommodate future growth in data volume and complexity.

- Low-Cost Servers: A single Nginx server can process over 500K RPM, providing a highly optimised and cost-efficient way to handle request processing for event tracking.

- Real-Time Processing: FluentBit lets us preprocess data in real time, improving ingestion speed and storage efficiency.

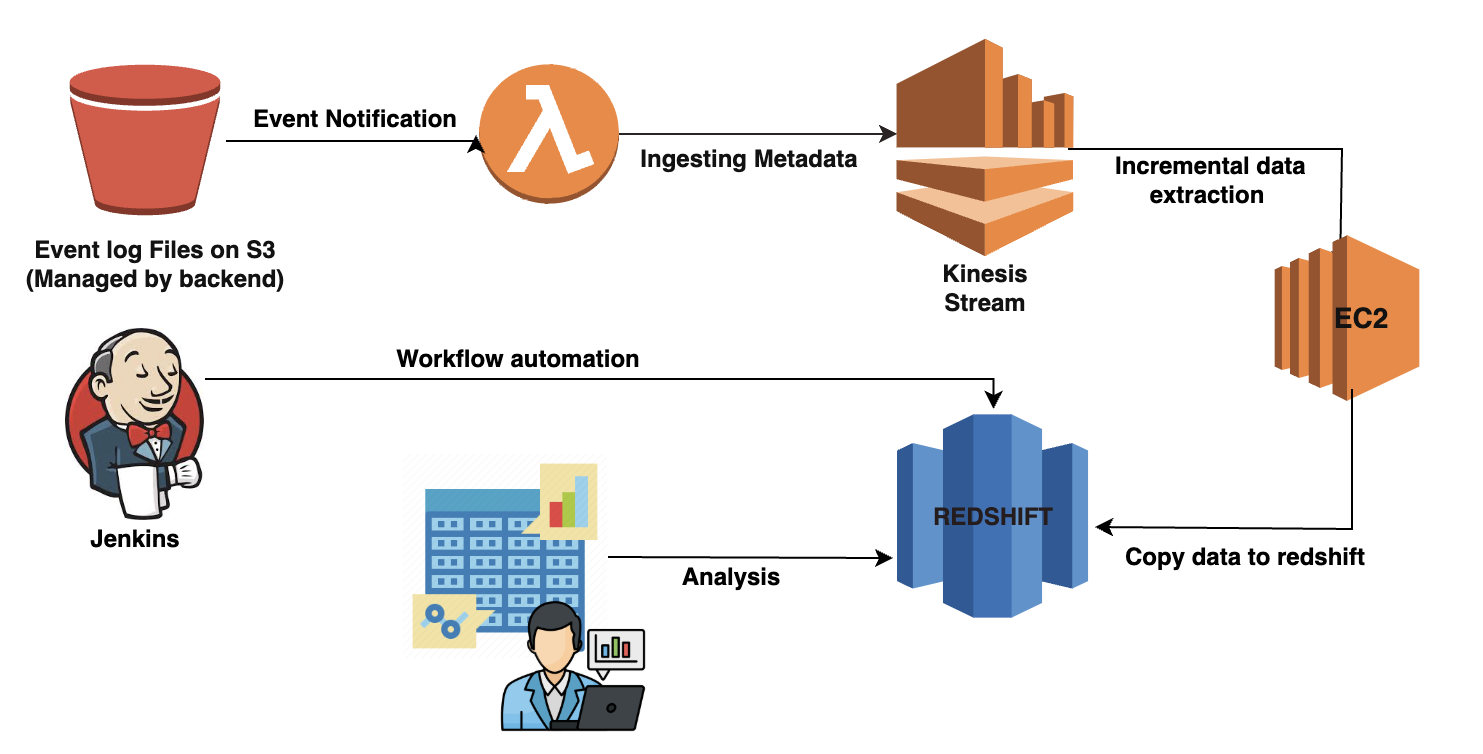

Data Pipeline to Bring Event Data to Redshift

At OLX India, handling millions of events daily requires an intelligent and efficient data pipeline. It all begins with event and log files arriving in S3, a reliable and cost-effective storage solution designed for scalability. As soon as a new file lands, AWS Lambda springs into action, detecting the update in real time and setting the pipeline in motion without any manual intervention.

Instead of moving large files through the system, Lambda extracts only the metadata and streams it into Kinesis. This ensures that processing remains lightweight, fast, and highly efficient, even when dealing with high-velocity data. The actual files remain securely stored in S3, ready to be accessed when needed.
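A hedged sketch of the Lambda step: it reads the bucket, key, size, and event time from a standard S3 event notification and forwards only that metadata to Kinesis. The stream name and the chosen metadata fields are assumptions. The Kinesis client is injected so the sketch runs without AWS; in a deployed function it would be `boto3.client("kinesis")`, whose `put_record` call takes `StreamName`, `Data`, and `PartitionKey`.

```python
import json
from urllib.parse import unquote_plus

STREAM_NAME = "eventhouse-file-metadata"  # hypothetical stream name


def extract_metadata(s3_event):
    """Pull file metadata out of an S3 event notification; the file body is never read."""
    items = []
    for record in s3_event.get("Records", []):
        s3 = record["s3"]
        items.append({
            "bucket": s3["bucket"]["name"],
            # Object keys arrive URL-encoded in S3 notifications.
            "key": unquote_plus(s3["object"]["key"]),
            "size": s3["object"].get("size"),
            "event_time": record.get("eventTime"),
        })
    return items


def make_handler(kinesis):
    """Build a Lambda-style handler around an injected Kinesis client."""
    def handler(event, context=None):
        items = extract_metadata(event)
        for item in items:
            kinesis.put_record(
                StreamName=STREAM_NAME,
                Data=json.dumps(item).encode("utf-8"),
                PartitionKey=item["key"],
            )
        return {"forwarded": len(items)}
    return handler
```

Only a few hundred bytes of metadata per file travel through Kinesis, which keeps the stream cheap regardless of how large the event files are.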

From there, a Python ETL pipeline continuously listens to Kinesis, fetching metadata and using it to retrieve the raw files from S3. These files are then seamlessly loaded into a Redshift staging table, allowing for structured validation and transformation before they reach the final production tables. This extra step ensures data integrity, preventing inconsistencies and optimising performance for analytics and reporting.

To maintain seamless operations, a robust checkpointing system tracks the last processed file, preventing duplication and enabling easy recovery in case of failures. At the heart of this operation, Jenkins orchestrates the entire workflow, automating scheduling, execution, and monitoring while sending real-time alerts.
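A minimal sketch of "last processed file" checkpointing, assuming object keys sort chronologically (as the date/hour partitioned paths do, provided batch names within an hour also sort in arrival order) and that the checkpoint lives in a small JSON file. `FileCheckpoint` and `process_new_files` are hypothetical names, and Jenkins orchestration is not modelled. The checkpoint advances only after a file loads successfully, so a restart resumes where the last run stopped without reloading files.

```python
import json
import os


class FileCheckpoint:
    """Persist the last successfully loaded S3 key (hypothetical local JSON store)."""

    def __init__(self, path):
        self.path = path

    def last_key(self):
        if not os.path.exists(self.path):
            return None
        with open(self.path) as f:
            return json.load(f)["last_key"]

    def commit(self, key):
        # Write to a temp file and rename it, so a crash never leaves a torn checkpoint.
        tmp = self.path + ".tmp"
        with open(tmp, "w") as f:
            json.dump({"last_key": key}, f)
        os.replace(tmp, self.path)


def process_new_files(keys, checkpoint, load):
    """Load keys newer than the checkpoint in order, committing after each one."""
    last = checkpoint.last_key()
    loaded = []
    for key in sorted(keys):
        if last is not None and key <= last:
            continue  # already loaded in an earlier run: skip to avoid duplicates
        load(key)  # if this raises, the checkpoint still points at the previous file
        checkpoint.commit(key)
        loaded.append(key)
    return loaded
```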

This approach worked so well that we also used the staging tables as a direct view into the S3 files while testing the end-to-end data flow. The EventHouse tables in Redshift follow a monthly partitioned naming convention, where the table-name suffix represents the year and month. This lets us remove data that is too old by dropping an entire monthly table, instead of repeatedly running computationally heavy vacuum jobs on a single large table.
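The monthly naming convention can be sketched as follows. The `eventhouse_events_YYYYMM` pattern and the 12-month retention are assumptions for illustration; the post only says that the suffix encodes the year and month. Retiring a month then becomes a single `DROP TABLE` rather than a large delete followed by a vacuum.

```python
from datetime import date

TABLE_PREFIX = "eventhouse_events_"  # hypothetical base name


def table_for(day):
    """Monthly table that holds events for `day`, e.g. eventhouse_events_202405."""
    return f"{TABLE_PREFIX}{day:%Y%m}"


def months_between(older, newer):
    """Whole calendar months from `older` to `newer`."""
    return (newer.year - older.year) * 12 + (newer.month - older.month)


def drop_statements(existing_tables, today, keep_months=12):
    """DROP TABLE statements for monthly tables outside the retention window."""
    stmts = []
    for name in sorted(existing_tables):
        if not name.startswith(TABLE_PREFIX):
            continue  # ignore tables that do not follow the convention
        suffix = name[len(TABLE_PREFIX):]
        month_start = date(int(suffix[:4]), int(suffix[4:6]), 1)
        if months_between(month_start, today) >= keep_months:
            stmts.append(f"DROP TABLE IF EXISTS {name};")
    return stmts


print(table_for(date(2024, 5, 17)))  # eventhouse_events_202405
```

With `keep_months=12`, the current month and the eleven before it are kept; everything older is dropped in one cheap metadata operation per month.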

Key Takeaways

Thanks for showing interest in our article on EventHouse. Building our own event tracking platform has empowered OLX India with greater flexibility, reliability, and cost efficiency. EventHouse has helped streamline data ingestion, validation, and processing at scale. It’s been a rewarding experience that sharpened our engineering skills and aligned our tools more closely with business needs. We hope our journey offers insights to others considering similar paths. Stay connected for more updates from the OLX India tech team!

Acknowledgements

Development Contributions –

- Data Engineering: Navdeep Gaur, Shrishty Srivastava

- Backend: Parvez Hassan

- Android/iOS: Ravi Rawal, Sandeep Chhabra

- PWA: Yash Chitlangia, Anshul Bansal

- DevOps: Kishan Tiwari

- Analytics: Jatin Pal Raina

- QA: Rathod Singh, Gaurav Jolly

- Engineering Manager (DE): Hitesh Kumar

- Engineering Manager (Platform): Hitesh Das