Here are some insights into how we built a highly scalable media server from scratch in just one month.

As of April 2024, the media server processes over 650M requests daily, with peaks of more than 15,000 image fetch requests per second, and performs real-time image processing such as watermarking and resizing. It also serves document and audio files.

Why did we have to build a media server now? –

- The demerger of OLX India from OLX Group in 2023 necessitated migrating India-specific infrastructure from the common OLX Group platforms.

- Previously, OLX utilized the group’s media server for serving all media assets such as images, documents, and audio files.

- We developed an in-house media server solution to gain more control and mitigate high licensing fees.

Requirements we had while building the Media Server –

- Handle uploading and fetching images, documents, and audio files.

- Serve images in custom resolutions in real-time as per client request.

- Images should carry an OLX logo watermark that is applied dynamically. (For example, if the logo changes someday, all existing images should start reflecting the new brand logo.)

- Support all common formats of images, documents and audio files.

- Support audio transcoding, so that audio files can be transcoded into MP3/MPEG formats while serving.

An idea of the scale we are operating at –

At OLX India, we handle over 650M daily fetch and upload requests, with peak throughput surpassing 15,000 requests per second. Our storage requirement reaches about 600M objects and more than 70 TB in disk space.
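
As a rough sanity check on these figures, the sketch below derives the average request rate and the average object size from the numbers quoted above. It assumes decimal terabytes and treats "about 600M" and "more than 70 TB" as exact values, so the results are ballpark estimates only.

```python
# Figures quoted in the article; treated as exact for a ballpark estimate.
DAILY_REQUESTS = 650_000_000
PEAK_RPS = 15_000
STORED_OBJECTS = 600_000_000
STORED_BYTES = 70 * 10**12  # assumption: 70 TB in decimal terabytes

SECONDS_PER_DAY = 24 * 60 * 60


def average_rps(daily_requests: int) -> float:
    """Average requests per second if traffic were spread evenly over a day."""
    return daily_requests / SECONDS_PER_DAY


def peak_to_average(peak_rps: int, daily_requests: int) -> float:
    """How many times larger the peak rate is than the daily average."""
    return peak_rps / average_rps(daily_requests)


def average_object_kb(total_bytes: int, objects: int) -> float:
    """Average object size in kilobytes (1 KB = 1000 bytes)."""
    return total_bytes / objects / 1000


if __name__ == "__main__":
    print(f"average rps: {average_rps(DAILY_REQUESTS):.0f}")
    print(f"peak / average: {peak_to_average(PEAK_RPS, DAILY_REQUESTS):.2f}")
    print(f"average object: {average_object_kb(STORED_BYTES, STORED_OBJECTS):.0f} KB")
```

Under these assumptions the average works out to roughly 7,500 requests per second, the peak is about twice the average, and the average stored object is a little under 120 KB.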

While building the media server, we encountered numerous challenges due to our scale and requirements –

- Designing a system capable of efficiently serving large volumes of media content.

- Providing real-time image processing capability with watermarking and custom resolutions.

- Ensuring a seamless rollout without platform downtime.

- Managing a massive migration of data and traffic without impacting user experience.

- Prioritizing essential features, including support for existing API contracts and functionality such as authorization, dynamic image processing, audio transcoding, upload via image URLs, encoding, and bulk operations.

- Planning for an optimized cost of running and maintaining the server.

- Building for quality, with proper tests, coverage, strong performance, and a top-notch user experience.

- Making sure the team is not overburdened by the strict timelines.

Plan of Action and Delivery –

- Break features down into three priority levels: p0, p1, and p2.

- Start building features that fall in the p0 level.

- Build the system to be backward compatible with the older system.

- Prepare a rollback strategy that lets us revert to the older system in case of any major failure in the new service. (The rollback strategy exists to avoid a bad user experience or a long-lasting outage on the platform.)

- Plan for continuous integration of code and features.

- Plan for quick rollouts via frequent, small releases.

- Plan the data migration strategy in parallel with code development.
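
The article does not describe how traffic was shifted between the old and new systems, so the following is only an illustrative sketch of one common way to combine a gradual rollout with an instant rollback. The names `RolloutConfig`, `bucket_for`, and `choose_backend` are hypothetical, as are the `"legacy"` and `"new"` backend labels.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class RolloutConfig:
    """Hypothetical runtime settings, e.g. loaded from a config service."""
    new_server_percent: int = 0  # share of traffic (0..100) sent to the new server
    kill_switch: bool = False    # when True, all traffic goes back to the old system


def bucket_for(media_id: str) -> int:
    """Map a media id to a stable bucket in [0, 100) using a hash."""
    digest = hashlib.sha256(media_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % 100


def choose_backend(media_id: str, config: RolloutConfig) -> str:
    """Return "new" or "legacy" for a request, honoring the kill switch first."""
    if config.kill_switch:
        return "legacy"
    if bucket_for(media_id) < config.new_server_percent:
        return "new"
    return "legacy"


if __name__ == "__main__":
    config = RolloutConfig(new_server_percent=10)
    print(choose_backend("img-123", config))
    config.kill_switch = True  # rollback: every request returns to the old system
    print(choose_backend("img-123", config))
```

Because the bucket is derived from a hash of the id, a given asset keeps hitting the same backend while the percentage is raised step by step, and flipping the kill switch reverts all traffic without a redeploy.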

Technical Insights –

- Core Media Service –

Our core microservice is built on Java Spring Boot and deployed on Amazon Elastic Kubernetes Service (EKS). It exposes a set of REST APIs for uploading and retrieving media content. We follow principles such as Clean Code, REST standards, Clean Architecture, Domain-Driven Design, and Test-Driven Development (TDD) to keep the system maintainable, scalable, and reliable.

- Object Storage –

We store media assets in AWS S3 object storage, chosen for its high scalability and reliability.

- Database –

MySQL stores the metadata for our media assets. Its proven track record in handling large datasets and fast read queries makes it a good fit for our database needs.

- AutoScaling –

We use Kubernetes autoscaling to serve high volumes of traffic cost-effectively. Server capacity is adjusted dynamically to match traffic demand, which keeps resource utilization efficient and costs under control.
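
The article does not say which autoscaler is used. Assuming the standard Kubernetes Horizontal Pod Autoscaler, its documented scaling rule is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change when the ratio is within a tolerance (0.1 by default). The sketch below reproduces that rule; the function name and the replica bounds are illustrative, not taken from the team's configuration.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 100,
    tolerance: float = 0.1,
) -> int:
    """Replica count suggested by the HPA scaling rule, clamped to the bounds."""
    ratio = current_metric / target_metric
    # Within the tolerance band the autoscaler leaves the deployment alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 10 pods at 90% average CPU with a 60% target scale out to 15 pods.
    print(desired_replicas(10, current_metric=90, target_metric=60))
    # The same 10 pods at 30% average CPU scale in to 5 pods.
    print(desired_replicas(10, current_metric=30, target_metric=60))
```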

- Dynamic Image Processing –

To serve images in different resolutions and with watermarks, we use an open-source image processing server written in Rust, which we have deployed internally to process images at fetch time.

Image processing is CPU-intensive, so we decided to pick a library written in either Go or Rust, both of which are highly performant languages that deliver strong performance with modest resources.

The image server takes an image together with formatting parameters and transforms the image accordingly. Once an image is processed, it is cached on the CDN so that we do not process the same image again and again.
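
To make "an image with formatting parameters" concrete, here is a minimal sketch of how a request might be parsed and turned into target dimensions. The query parameters `w`, `h`, and `wm`, the `MAX_DIMENSION` limit, and the fit-within-box policy are all assumptions for illustration. They are not the actual API of the image server used here.

```python
from dataclasses import dataclass
from urllib.parse import parse_qs

MAX_DIMENSION = 4096  # assumed upper bound to reject abusive requests


@dataclass(frozen=True)
class TransformParams:
    width: int
    height: int
    watermark: bool


def parse_transform(query: str) -> TransformParams:
    """Parse a hypothetical query string such as "w=300&h=200&wm=1"."""
    values = parse_qs(query)
    try:
        width = int(values["w"][0])
        height = int(values["h"][0])
    except (KeyError, ValueError) as exc:
        raise ValueError("w and h must be present and integers") from exc
    if not (0 < width <= MAX_DIMENSION and 0 < height <= MAX_DIMENSION):
        raise ValueError("requested size out of range")
    # Watermarking defaults to on unless the request sets wm=0.
    watermark = values.get("wm", ["1"])[0] != "0"
    return TransformParams(width, height, watermark)


def fit_within(src_width: int, src_height: int, params: TransformParams) -> tuple[int, int]:
    """Scale the source to fit the requested box, keeping aspect ratio, never upscaling."""
    scale = min(params.width / src_width, params.height / src_height, 1.0)
    return max(1, round(src_width * scale)), max(1, round(src_height * scale))


if __name__ == "__main__":
    params = parse_transform("w=300&h=300")
    print(fit_within(1200, 800, params))  # a 1200x800 source fits as 300x200
```

Validating and bounding the parameters up front matters for a real-time processor, since every distinct parameter combination creates a new CPU-bound job and a new cache entry.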

Question – Why are we not pre-processing the images and saving them in object storage, instead of doing real-time processing, which makes the first fetch slow?

It is true that real-time image processing makes the first fetch slow, but we still didn’t choose the pre-processing approach because –

- It multiplies the storage space by a factor of N+1. (N is the number of different resolutions you need to support at a given time.)

- For example, if you have 10 images and 5 resolutions, the total number of images you need to store is 10*5 + 10 (original images) = 60 images.

- In addition, whenever you add support for a new resolution, you have to re-process all existing media assets to convert them and save them in your object storage, which is costly at a high scale.

- Whenever the watermark changes, you have to re-process all existing images to apply the new watermark.

Considering that we have more than 550M assets in our system and need images in multiple sizes for different devices and screens, it doesn’t make sense to replicate this many assets N+1 times. Re-processing everything whenever the watermark changes or a new resolution is added would not be efficient either.

Hence, we opted for real-time image processing with CDN caching to serve our media content.
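
The storage argument above can be written out as a small calculation. The sketch below applies the same N+1 formula to the article's 10-image example and to 550M assets. The choice of 5 resolutions for the larger case is an assumption carried over from the example, not a figure from our system, and the watermark count assumes every resized variant carries the watermark.

```python
def stored_images(originals: int, resolutions: int) -> int:
    """Objects to store when every resolution is pre-generated: originals * (N + 1)."""
    return originals * resolutions + originals


def jobs_for_new_resolution(originals: int) -> int:
    """Adding one resolution means one new rendition per existing original."""
    return originals


def jobs_for_watermark_change(originals: int, resolutions: int) -> int:
    """Assuming every variant is watermarked, each one must be regenerated."""
    return originals * resolutions


if __name__ == "__main__":
    print(stored_images(10, 5))  # the article's example: 60 images
    assets = 550_000_000
    print(stored_images(assets, 5))
    print(jobs_for_new_resolution(assets))
    print(jobs_for_watermark_change(assets, 5))
```

With 5 pre-generated resolutions, 550M originals would grow to 3.3 billion stored objects, and a single watermark change would trigger 2.75 billion re-processing jobs.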

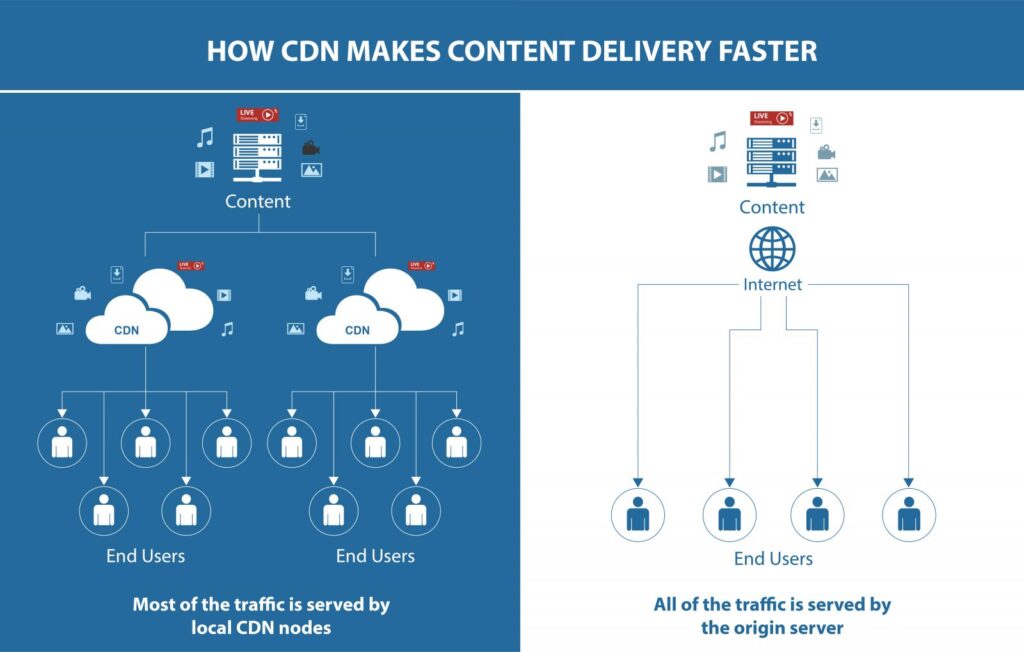

- CDN (Content Delivery Network with Caching) –

A content delivery network (CDN) is a group of geographically distributed servers that speed up the delivery of web content by bringing it closer to where users are.

CDNs rely on a process called “caching” that temporarily stores copies of files in data centres across the globe, allowing you to access internet content from a server near you. By caching content on servers near your physical location, a CDN reduces media load times and delivers a faster web experience.

Currently, we are using Akamai as our CDN partner.
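
The effect of caching processed images can be illustrated with a toy in-process model. This is not how Akamai is configured or implemented. `EdgeCache`, `cache_key`, and the TTL value are hypothetical names and settings used only to show that the origin processes each distinct image variant once per cache lifetime.

```python
import time
from typing import Callable, Dict, Tuple


def cache_key(path: str, params: Dict[str, int]) -> str:
    """Build a canonical key so that equivalent requests share one cache entry."""
    query = "&".join(f"{name}={params[name]}" for name in sorted(params))
    return f"{path}?{query}"


class EdgeCache:
    """Toy TTL cache standing in for a CDN edge in front of the image server."""

    def __init__(self, origin: Callable[[str], bytes], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._origin = origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, bytes]] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]
        # Miss or expired entry: ask the origin to process the image again.
        self.misses += 1
        body = self._origin(key)
        self._entries[key] = (now + self._ttl, body)
        return body


if __name__ == "__main__":
    def process_image(key: str) -> bytes:
        print("origin processing", key)
        return b"image-bytes"

    cache = EdgeCache(process_image, ttl_seconds=3600)
    key = cache_key("/img/1", {"w": 300, "h": 200})
    cache.get(key)  # first fetch: slow path through the origin
    cache.get(key)  # later fetches: served from the cache
    print(cache.hits, cache.misses)
```

Sorting the parameters when building the key is the important detail: without it, `w=300&h=200` and `h=200&w=300` would be cached and processed separately.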

Key Learnings –

- Always clearly define your ‘MUST HAVE’ and ‘GOOD TO HAVE’ features. Start with the must-haves and then move on to the good-to-haves.

- KEEP IT SIMPLE, Stupid. We engineers often overcomplicate things; keep them as simple as possible and avoid over-engineering.

- Always plan a RELEASE along with a rollback strategy. Our rollback strategy gave us huge peace of mind while releasing: we knew that if anything failed, we could always roll back to the older system and avoid any user experience issues or downtime on our platform.

- Plan and design thoroughly with performance and cost at scale in mind when making core tech decisions, as they are often difficult to alter later.

- Build with unit tests and integration tests in place to avoid manual testing effort and get faster feedback on new changes. Use TDD for quick feedback cycles.

- Adopt Trunk-Based Development (TBD) for faster code integration.

- Use feature-toggle-based development. It helps with early code integration and lets you enable or disable flows based on feedback and needs.

- Look out for open-source solutions before reinventing the wheel.

- Build a great team that can deliver quality products quickly.

Acknowledgements

Development Contributions – Parvez Hassan, Utsav Khanna, Shourya Kohli.